Cascading Citation Expansion

CiteSpace cascading citation expansion has the potential to improve our understanding of the structure and dynamics of scientific knowledge.
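Cascading expansion can be pictured as a breadth-first traversal of a citation graph. It starts from a set of seed papers and, at each hop, follows their references (backward) and the papers citing them (forward). The sketch below is an illustration only, not CiteSpace's own implementation. It assumes a small in-memory graph with hypothetical paper IDs, and a hypothetical `max_depth` cutoff that stops the cascade.

```python
from collections import deque

# Hypothetical toy citation graph: each paper maps to the papers it cites.
REFERENCES = {
    "A": ["B", "C"],
    "B": ["D"],
    "C": ["D", "E"],
    "D": [],
    "E": [],
    "F": ["A"],
    "G": ["F", "C"],
}


def citing_index(references):
    """Invert reference lists so we can also walk forward (who cites a paper)."""
    cited_by = {paper: [] for paper in references}
    for paper, refs in references.items():
        for ref in refs:
            cited_by.setdefault(ref, []).append(paper)
    return cited_by


def cascade_expand(seeds, references, max_depth=2, forward=True, backward=True):
    """Breadth-first expansion from seed papers, up to max_depth hops.

    Returns a dict mapping each reached paper to the hop at which it was first found.
    """
    cited_by = citing_index(references)
    depth = {s: 0 for s in seeds}
    queue = deque(seeds)
    while queue:
        paper = queue.popleft()
        if depth[paper] == max_depth:
            continue  # stop expanding once the depth limit is reached
        neighbours = []
        if backward:
            neighbours += references.get(paper, [])  # papers this one cites
        if forward:
            neighbours += cited_by.get(paper, [])  # papers that cite this one
        for nxt in neighbours:
            if nxt not in depth:  # record only the first (shortest) hop
                depth[nxt] = depth[paper] + 1
                queue.append(nxt)
    return depth
```

Take `cascade_expand(["A"], REFERENCES, max_depth=1)`: it reaches A's references B and C and its citing paper F. Raising `max_depth` to 2 picks up the rest of the toy graph.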

New interface shifts from journal metrics to journal intelligence, offering richer data and greater transparency for comprehensive assessment.

A web application showing how successful universities or research-focused institutions collaborate.

Even after a paper is retracted, it will continue to be cited, often by researchers who don’t realize the findings are problematic.

Although the Journal Impact Factor (JIF) is widely acknowledged to be a poor indicator of the quality of individual papers, it is used routinely to evaluate research and researchers. Here, we present a simple method for generating the citation distributions that underlie JIFs. Application of this straightforward protocol reveals the full extent of the skew of these distributions and the variation in citations received by published papers that is characteristic of all scientific journals. Although there are differences among journals across the spectrum of JIFs, the citation distributions overlap extensively, demonstrating that the citation performance of individual papers cannot be inferred from the JIF. We propose that this methodology be adopted by all journals as a move to greater transparency, one that should help to refocus attention on individual pieces of work and counter the inappropriate usage of JIFs during the process of research assessment.
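The proposed protocol amounts to publishing the histogram that lies behind the JIF, rather than only its mean. The sketch below shows that contrast. It assumes a hypothetical list of citation counts, with one entry per citable item published in the two preceding years. It also simplifies the JIF to a plain mean: the real JIF counts citations to all items in its numerator but only "citable items" in its denominator.

```python
from collections import Counter
from statistics import median

# Hypothetical citation counts received in year Y by each citable item a journal
# published in years Y-1 and Y-2 (illustrative numbers, not real data).
citations = [0, 0, 1, 1, 1, 2, 2, 3, 3, 4, 5, 6, 8, 12, 45, 110]


def jif_like(counts):
    """JIF-style ratio: total citations divided by the number of items."""
    return sum(counts) / len(counts)


def distribution(counts):
    """Number of papers at each citation count: the histogram behind the JIF."""
    return dict(sorted(Counter(counts).items()))


def share_below(counts, threshold):
    """Fraction of papers cited fewer times than the threshold."""
    return sum(c < threshold for c in counts) / len(counts)


jif = jif_like(citations)
print(f"JIF-style mean: {jif:.2f}")  # pulled upward by a few highly cited items
print(f"median citations: {median(citations)}")
print(f"share of papers below the mean: {share_below(citations, jif):.0%}")
print(distribution(citations))
```

With skewed counts like these, most papers sit below the mean. That is the gap between the JIF and the citations of an individual paper that the authors highlight.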

A growing gap exists between an academic sector with little capacity for collective action and increasing demand for routine performance assessment by research organizations and funding agencies. This gap has been filled by database providers. By selecting and distributing research metrics, these commercial providers have gained a powerful role in defining de-facto standards of research excellence without being challenged by expert authority.

Google Scholar presents a broader view of the academic world because it has brought to light a great number of sources that were not previously visible.

As of May 2018, CORE has aggregated over 131 million article metadata records, 93 million abstracts, 11 million hosted and validated full texts and over 78 million direct links to research papers hosted on other websites.

Newly exposing peer review information through a public API creates opportunities for discoverability, analysis, and tool integration.

Current bibliometric incentives discourage innovative studies and encourage scientists to shift their research to subjects already actively investigated.

Preprints are one of the fastest-growing types of content in Crossref. Their growth over the past two years may be around 30%, compared with article growth of 2-3% over the same period.

LERU's paper discusses the eight pillars of Open Science identified by the European Commission: the future of scholarly publishing, FAIR data, the European Open Science Cloud, education and skills, rewards and incentives, next-generation metrics, research integrity, and citizen science.

A study identifies papers that stand the test of time. Fewer than two out of every 10,000 scientific papers remain influential in their field decades after publication, finds an analysis of five million articles published between 1980 and 1990.
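Applied to the five million articles analysed, the stated rate gives an upper bound on the absolute number of papers that remain influential:

```latex
5 \times 10^{6}\ \text{articles} \times \frac{2}{10^{4}} = 10^{3}\ \text{articles}
```

In other words, fewer than about 1,000 papers from the entire 1980-1990 sample remain influential.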

When citation-based indicators are applied at the institutional or departmental level, rather than at the level of individual papers, surprisingly large correlations with peer review judgments can be observed.
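One common way to test such a correlation is to aggregate paper-level citation counts into an institution-level indicator, then compute a Spearman rank correlation against peer-review scores. The source does not say which statistic or aggregation was used. The mean as the aggregator, the Spearman statistic, and all institution names and numbers below are assumptions chosen for illustration. The toy result therefore says nothing about real institutions.

```python
from statistics import mean


def average_ranks(values):
    """Rank values from 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical paper-level citation counts grouped by institution,
# and hypothetical peer-review scores for the same institutions.
papers = {
    "Inst1": [0, 2, 3, 40],
    "Inst2": [1, 1, 5, 6],
    "Inst3": [10, 12, 15, 30],
    "Inst4": [0, 0, 1, 2],
}
peer_review = {"Inst1": 3.9, "Inst2": 2.4, "Inst3": 3.6, "Inst4": 1.9}

institutions = sorted(papers)
indicator = [mean(papers[name]) for name in institutions]  # aggregate to institution level
scores = [peer_review[name] for name in institutions]
print(round(spearman(indicator, scores), 3))  # about 0.8 for these toy numbers
```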

By tying rewards to metrics, organisations risk incentivising gaming and encouraging behaviours that may be at odds with their larger purpose. The culture of short-termism engendered by metrics also impedes innovation and stifles the entrepreneurial element of human nature.

Simply adding an ‘open access’ option to the existing prestige-based journal system, at ever-increasing costs, is not the fundamental change publishing needs, say Bianca Kramer and Jeroen Bosman.

Academia has relied on citation count as the main way to measure the impact or importance of research, informing metrics such as the Impact Factor and the h-index. But how well do these metrics actually align with researchers’ subjective evaluation of impact and significance?
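For reference, the h-index is the largest h such that h of a researcher's papers have at least h citations each. The following is a minimal Python sketch; the citation counts used with it are hypothetical.

```python
def h_index(citation_counts):
    """Largest h such that at least h papers have at least h citations each."""
    ranked = sorted(citation_counts, reverse=True)  # most cited first
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break  # counts only decrease from here, so h cannot grow
    return h
```

For example, `h_index([100, 3, 3, 3])` and `h_index([4, 4, 4, 3])` both return 3, even though the total citations are 109 and 15. The case shows how much of a citation record a single number can leave out.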

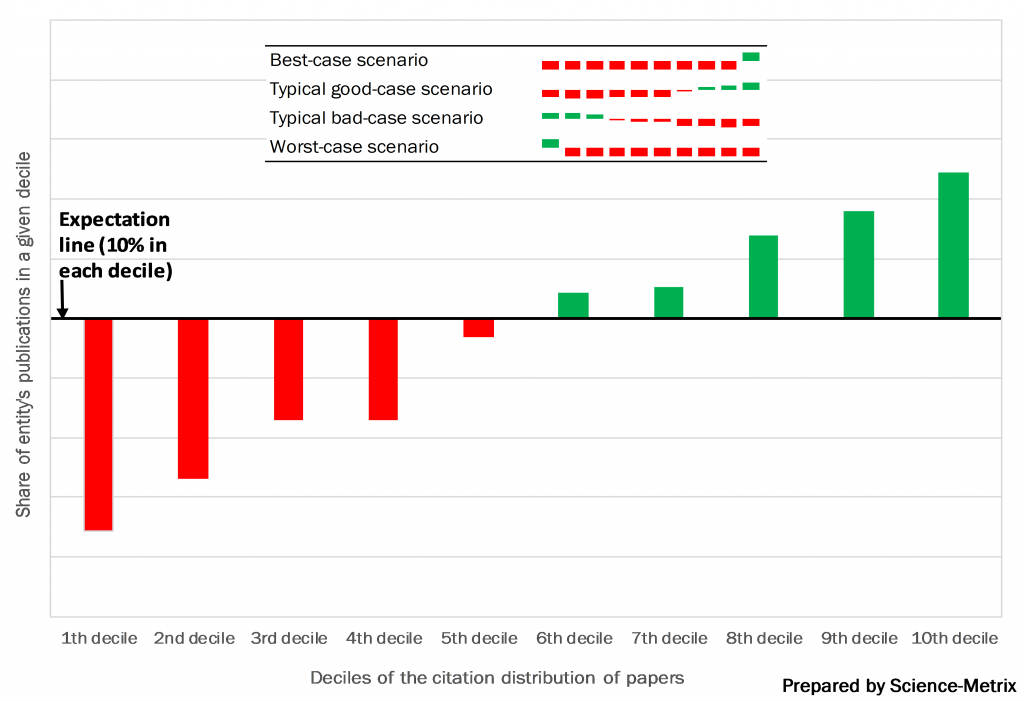

This post introduces the citation distribution index, an impact indicator developed by Science-Metrix to address many of the limitations of the average measures used in bibliometrics.

For the USA, this study finds that the entire history of science Nobel Prizes, on a per capita basis, is described with astonishing accuracy by a single large productivity boost that has been decaying at a continuously accelerating rate since its peak in 1972.

We call for bringing sanity back into scientific judgment exercises. Despite all the number crunching, many judgments, whether about scientific output, scientists, or research institutions, will be neither unambiguous, nor uncontroversial, nor testable by external standards; nor can they be otherwise validated or objectified.

Sneha Kulkarni from Editage takes a look at the ever-increasing global scientific output, and asks questions about quantity versus quality.

Contrary to commonsense belief, attempts to measure productivity through performance metrics discourage initiative, innovation and risk-taking. The entrepreneurial element of human nature is stifled by metric fixation.

This study aims to provide a detailed description of the free version of Dimensions, the new bibliographic database produced by Digital Science. It analyses Dimensions' coverage, comparing it with Scopus and Google Scholar, to determine whether the bibliometric indicators Dimensions offers are of a large enough order of magnitude to be usable.

When researchers write, we don't just describe new findings - we place them in context by citing the work of others. Citations trace the lineage of ideas, connecting disparate lines of scholarship into a cohesive body of knowledge, and forming the basis of how we know what we know.

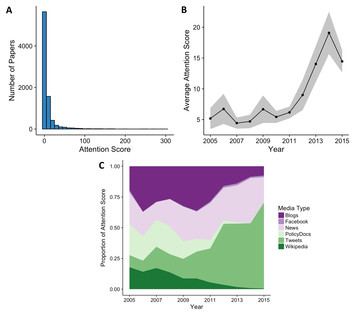

In recent years, increasing media exposure (as measured by Altmetrics) has not been accompanied by the same gains in citations as in earlier years, signaling a diminishing return on investment.

There is some evidence that the more revisions a paper undergoes, the greater its subsequent recognition in terms of citation impact.

On average, papers with an institutional e-mail address receive more citations than other ones.