Move Beyond 'Publish or Perish' by Measuring Behaviours That Benefit Academia

Measures intended to encourage openness are clashing with efforts to reform assessment

Clarivate has decided to continue indexing some content from eLife in Web of Science.

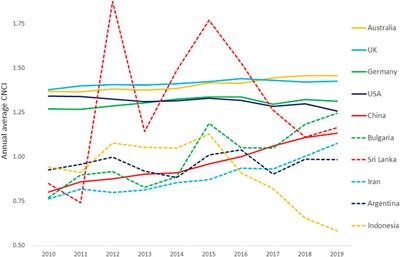

This study discusses the implications of research metrics as applied to transition countries, using the ten principles of the Leiden Manifesto as a framework. These principles can guide Central Asian policymakers in creating systems for a more objective evaluation of research performance based on globally recognized indicators.

The role of bibliometrics, such as impact factors and h-indices, in shaping research has been well documented. However, what function do these measures have beyond the institutional contexts in which, for better or worse, they were designed?

The focus on a narrow set of metrics leads to a lack of diversity in the types of leader and institution that win funding.

For more than five years, Bornmann, Stefaner, de Moya Anegón, and Mutz (2014b) and Bornmann, Stefaner, de Moya Anegón, and Mutz (2014c, 2015) have published several releases of the www.excellencemapping.net tool revealing (clusters of) excellent institutions worldwide based on citation data. With the new release, a completely revised tool has been published. It is based not only on citation data (bibliometrics) but also on Mendeley data (altmetrics). Thus, the institutional impact measurement of the tool has been expanded to cover additional status groups besides researchers, such as students and librarians. Furthermore, the visualization of the data has been completely updated, improving operability for the user and adding new features such as institutional profile pages. In this paper, we describe the datasets for the current excellencemapping.net tool and the indicators applied. Furthermore, the underlying statistics for the tool and the use of the web application are explained.

The question of whether and to what extent research funding enables researchers to be more productive is a crucial one. In their recent work, Mariethoz et al. (Scientometrics, 2021, https://doi.org/10.1007/s11192-020-03855-1) claim that there is no significant relationship between project-based research funding and bibliometric productivity measures and conclude that this is the result of inappropriate allocation mechanisms. In this rejoinder, we argue that such claims are not supported by the data and analyses reported in the article.

Article Attention Scores for papers don't seem to add up, leading one to question whether Altmetric data are valid, reliable, and reproducible.

In this article, we pursue two goals: collecting empirical data on researchers' personal estimations of the importance of the h-index for themselves and for their academic disciplines, and on researchers' concrete knowledge of the h-index and how it is calculated.
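
For readers unsure of the calculation themselves: under the standard definition, the h-index is the largest number h such that h of an author's papers have each been cited at least h times. The following is a minimal sketch of that definition, not code from the article; the function name `h_index` and the sample citation counts are illustrative assumptions.

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations each."""
    # Rank papers from most cited to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            # The top `rank` papers all have at least `rank` citations.
            h = rank
        else:
            # Counts only decrease from here, so no larger h is possible.
            break
    return h


# Hypothetical citation counts for five papers.
print(h_index([10, 8, 5, 4, 3]))
```

Because the measure depends only on the ranked citation counts, a single very highly cited paper raises it no more than a paper that just clears the threshold.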

But critics worry the metrics remain prone to misuse.

This study applies a novelty indicator that quantifies the degree of citation similarity between a focal paper and pre-existing same-domain papers in various fields of the natural sciences. It does so by proposing a new way of identifying papers that fall into the same domain as a focal paper using bibliometric data only.

The Matthew Effect, which breeds success from success, may rely on standing on the shoulders of others, citation bias, or the efforts of a collaborative network. Prestige is driven by resource, which in turn feeds prestige, amplifying advantage and rewards, and ultimately skewing recognition.

The scientific merit of a paper and its ability to reach broader audiences are essential for scientific impact. Scientific merit is measured by scientometric indexes, and journals are increasingly publishing papers as open access (OA).

PLOS partners with Altmetrics.

The International Society for Informetrics and Scientometrics (ISSI) is an international association of scholars and professionals active in the interdisciplinary study of the science of science, science communication, and science policy.

Nations the world over are increasingly turning to quantitative performance-based metrics to evaluate the quality of research outputs, as these metrics are abundant and provide an easy measure of ranking research. In 2010, the Danish Ministry of Science and Higher Education followed this trend and began portioning out a percentage of the available research funding according to how many research outputs each Danish university produces. Not all research outputs are eligible: only those published in a curated list of academic journals and publishers, the so-called BFI list, are included. The BFI list is ranked, which may create incentives for academic authors to target certain publication outlets or publication types over others. In this study we examine the potential effect these relatively new research evaluation methods have had on the publication patterns of researchers in Denmark. The study finds that publication behaviors in the Natural Sciences & Technology, Social Sciences and Humanities (SSH) have changed, while the Health Sciences appear unaffected. Researchers in Natural Sciences & Technology appear to focus on high impact journals that reap more BFI points. While researchers in SSH have also increased their focus on the impact of the publication outlet, they also appear to have altered their preferred publication types, publishing more journal articles in the Social Sciences and more anthologies in the Humanities.

The paper concludes that metrics were applied chiefly as a screening tool to reduce the number of eligible candidates, not as a replacement for peer review.

The article discusses how the interpretation of 'performance' from a presentation using accurate but summary bibliometrics can change when iterative deconstruction and visualization of the same dataset is applied.

What research caught the public imagination in 2020? Check out Altmetrics' annual list of papers with the most attention.

The active use of metrics in everyday research activities suggests academics have accepted them as standards of evaluation, that they are “thinking with indicators”. Yet when asked, many academics profess concern about the limitations of evaluative metrics and the extent of their use.

Journal rankings are a rigged game. The blacklist of history of economic thought journals is neither a fluke nor a conspiracy: it exposes how citation rankings really work.

"Science, research and innovation performance of the EU, 2018" (SRIP) analyses Europe’s performance dynamics in science, research and innovation and its drivers, in a global context.

A novel set of text- and citation-based metrics can be used to identify high-impact and transformative works. The 11 metrics can be grouped into seven types: Radical-Generative, Radical-Destructive, Risky, Multidisciplinary, Wide Impact, Growing Impact, and Impact (overall).

Elsevier has become the newest customer of Impactstory's Unpaywall Data Feed, which provides a weekly feed of changes in Unpaywall, our open database of 20 million open access articles.

To what extent does the academic research field of evaluative citation analysis confer legitimacy to research assessment as a professional practice?