Responsible Research Assessment Faces the Acid Test

The University of Liverpool is planning to make lay-offs on the basis of controversial measures. How should the global movement for responsible research respond?

For the first time, a prestigious funder has explicitly told academics that they must not include metrics when applying for grants.

A new study of grants awarded to early-career researchers by Europe's premier science agency is reviving an old controversy over the way governments decide which scientists get research money, and which do not.

Wider take-up by academics requires both relevance to specific disciplines and accessibility across disciplines, says Camille Kandiko Howson.

Acting as a reviewer is considered a substantial part of the role-bundle of the academic profession. However, little is known about academics' motivation to act as reviewers.

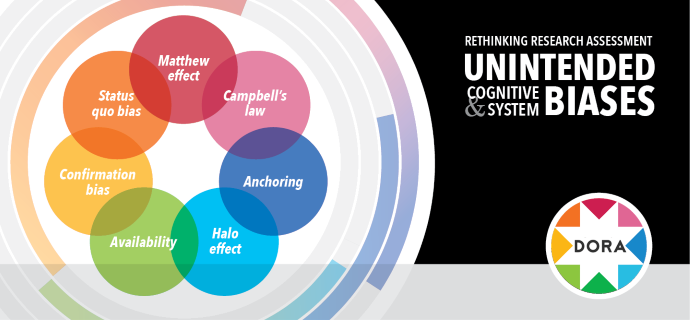

When it comes to mistaken judgments, there is more than one kind of error.

A responsible research assessment would incentivise, reflect and reward the plural characteristics of high-quality research, in support of diverse and inclusive research cultures.

Systems for assessing scientists' work must properly account for a lost year of research, especially for female researchers.

In recent years, numerous initiatives have highlighted linguistic biases embedded in current evaluation processes and have called for change. The DORA-hosted community discussion on multilingualism in scholarly evaluation was inspired by actions others have taken to address these issues.

What are the pitfalls of using AI as a citation evaluation tool?

A better balance between teaching and research duties, greater recognition of team performances and the elimination of simplistic assessment criteria would improve the systems of recognition and rewards in academia.

A post on The Official PLOS Blog examines how researchers evaluate both the credibility and the impact of research outputs.

Part one of a four-part series on major barriers to equitable decision-making in hiring, review, promotion, and tenure processes that commonly result from biased thinking in academia. Part one delves into objective comparisons.

Purely metric-based research evaluation schemes could lead to a dystopian academic reality that leaves no space for creativity and intellectual initiative, a new study claims.

The Swiss National Science Foundation (SNSF) set out to examine whether the gender of applicants and peer reviewers and other factors influence peer review of grant proposals submitted to a national funding agency.

Recognizing the many ways that researchers (and others) contribute to science and scholarship has historically been challenging, but we now have options, including CRediT and ORCID.

DORA has evolved into an active initiative that gives practical advice to institutions on new ways to assess and evaluate research. This article outlines a framework for driving institutional change.

The Hong Kong Principles seek to replace the 'publish or perish' culture.

Universities and research funders are increasingly reconsidering the relevance and importance of researchers' contributions when assessing them for hiring, promotion or funding.

As scientists, we try to make sure our research is rigorous so that we can avoid costly errors. We should take the same approach to tackle issues in research culture, says Professor Christopher Jackson.

A study found that preliminary criterion scores fully account for racial disparities in preliminary overall impact scores, although they do not explain all of the variability in those scores.

Critics of current methods for evaluating researchers’ work say a system that relies on bibliometric parameters favours a ‘quantity over quality’ approach, and undervalues achievements such as social impact and leadership.

A researcher from Wuhan University in China offers a view of how Chinese researchers are reacting to, and are likely to alter their behavior in response to, new policies governing research evaluation.

The Swiss National Science Foundation is testing a new CV format for researchers applying for project funding in biology and medicine (submission deadline 01 April 2020).

A new study says student evaluations of teaching are still deeply flawed measures of teaching effectiveness, even when we assume they are unbiased and reliable.

Citations are ubiquitous in evaluating research, but how exactly they relate to what they are thought to measure is unclear. This article investigates the relationships between citations, quality, and impact using a survey with an embedded experiment.

Researchers are used to being evaluated based on indices like the impact factors of the scientific journals in which they publish papers and their number of citations. A team of 14 natural scientists from nine countries are now rebelling against this practice, arguing that obsessive use of indices is damaging the quality of science.

Failed funding applications are inevitable, but perseverance can pay dividends.