eLife to Lose Impact Factor Due to Peer Review Change

Journal’s policy of publishing all reviewed submissions irrespective of quality conflicts with citation metric’s rules.

A more nuanced balance between the use of metrics and peer review in research assessment might be needed.

Critics say redundancies are being decided using unreliable measures related to funding and citations, highlighting broader unease about the use of metrics in science.

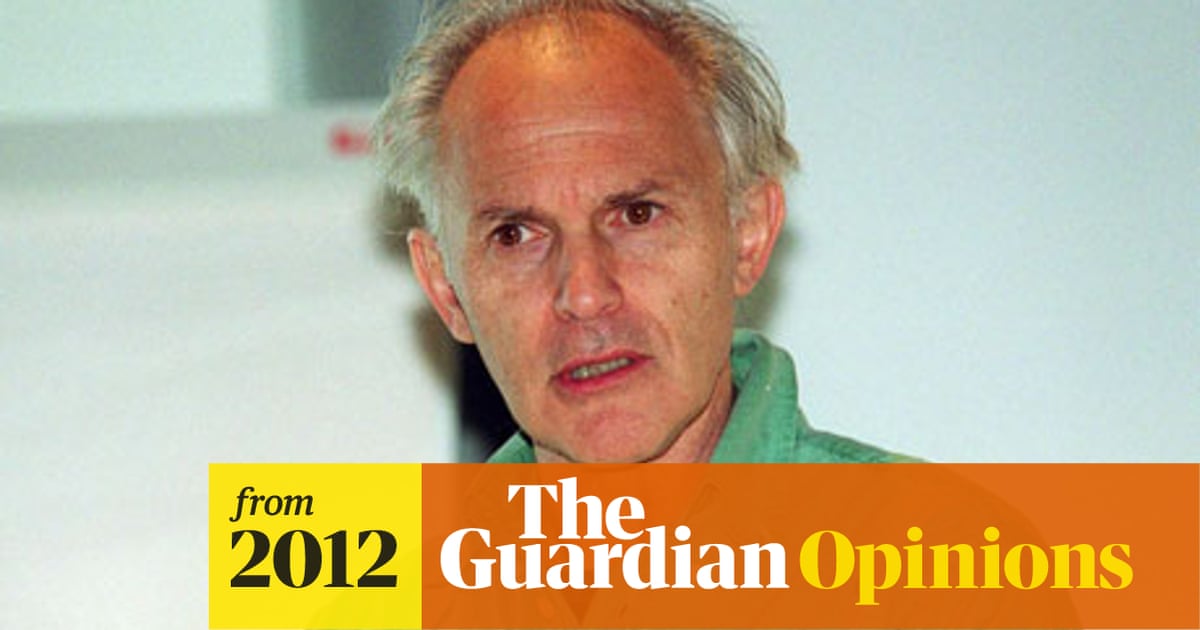

Philip Ball: It was meant to bring rigour to the tricky question of who deserves a grant or a post, but is the h-index's numerical score simplistic?

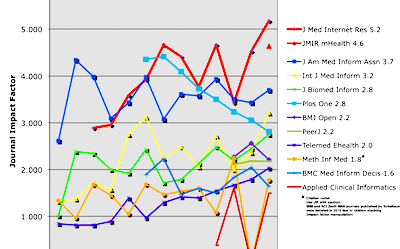

In academia, decisions on promotions are influenced by the citation impact of the works published by the candidates. The authors examine whether the journal impact factor rank could be replaced with the relative citation ratio, an article-level measure of citation impact developed by the National Institutes of Health.

Purely metric-based research evaluation schemes potentially lead to a dystopian academic reality, leaving no space for creativity and intellectual initiative, claims a new study.

UCLA professor of Law and Communication Mario Biagioli dissects how metric-based evaluations are shaping university agendas.

This paper presents a state-of-the-art analysis of the presence of 12 kinds of altmetric events for nearly 12.3 million Web of Science publications published between 2012 and 2018.

Was there ever a golden age of unsullied science, as a book implies?

At least two more journals are fighting decisions by Clarivate — the company behind the Impact Factor — to suppress them from the 2019 list of journals assigned a metric that many rightly or wrongly consider career-making.

Novelty indicators are increasingly important for science policy. This paper challenges the indicators of novelty as an atypical combination of knowle…

A publication questions the reliability of Impact Factor (IF) rankings, given that the IF is highly sensitive to a few papers, an effect that touches thousands of journals.

Critics of current methods for evaluating researchers’ work say a system that relies on bibliometric parameters favours a ‘quantity over quality’ approach, and undervalues achievements such as social impact and leadership.

Love it or hate it, the H-index has become one of the most widely used metrics in academia for measuring the productivity and impact of researchers. But when Jorge Hirsch proposed it as an objective measure of scientific achievement in 2005, he didn’t think it would be used outside theoretical physics.

A researcher from Wuhan University in China offers a view of how Chinese researchers are reacting, and are likely to alter their behavior, in response to new policies governing research evaluation.

Academic scientists and research institutes are increasingly being evaluated using digital metrics, from bibliometrics to patent counts. These metrics are often framed, by science policy analysts, economists of science as well as funding agencies, as objective and universal proxies for scientific worth, potential, and productivity.

Altmetrics have been an important topic in the context of open science for some time.

The publication output of doctoral students is increasingly used in selection processes for funding and employment in their early careers.

In recent years, the full text of papers has become increasingly available electronically, which opens up the possibility of quantitatively investigating citation contexts in more detail.

Originality has self-evident importance for science, but objectively measuring originality poses a formidable challenge.

Citation metrics have value because they aim to make scientific assessment a level playing field, but urgent transparency-based changes are necessary to ensure that the data yields an accurate picture. One problematic area is the handling of self-citations.

The first version of our metadata input schema (a DTD, to be specific) was created in 1999 to capture basic bibliographic information and facilitate matching DOIs to citations. Over the past 20 years the bibliographic metadata we collect has deepened, and we've expanded our schema to include funding information, license, updates, relations, and other metadata. Our schema isn't as venerable as a MARC record or as comprehensive as JATS, but it's served us well.

Crossref strives for balance. Different people have always wanted different things from us and, since our founding, we have brought together diverse organizations to have discussions, sometimes contentious, to agree on how to help make scholarly communications better. Being inclusive can mean slow progress, but we've been able to advance by being flexible, fair, and forward-thinking. We have been helped by the fact that Crossref's founding organizations defined a clear purpose in our original certificate of incorporation, which reads:

Citation metrics are widely used and misused. This Community Page article presents a publicly available database that provides standardized information on multiple citation indicators and a composite thereof, annotating each author according to his/her main scientific field(s).