Science's Golden Oldies: the Decades-old Research Papers Still Heavily Cited Today

Ukrainian researchers urge publishing sector giants to “end de facto cooperation with Russia”.

Strong evidence suggests that women are not cited less per article than men, but that they accumulate fewer citations over time and at the career level. Cary Wu argues that a focus on research productivity is key to understanding and closing the gender citation gap.

Academic peer review is seriously undertheorized because peer review studies focus on discovering and confirming phenomena, such as biases, and are much less concerned with explaining, predicting, or controlling phenomena on a theoretical basis.

ERROR is a bug bounty program for science to systematically detect and report errors in academic publications.

The UK Government’s research evaluation system encourages a higher quantity and lower quality of work from academics, according to a recent paper.

Can science papers be more transparent with respect to who thought of each idea, who ran each experiment, and who analysed the data?

A culture of fear around corrections and retractions is hampering efforts to maintain the integrity of scientific research.

By Frontiers' science writers. As part of Frontiers' passion to make science available to all, we highlight just a small selection of the most fascinating research published with us each month to help inspire current and future researchers to achieve their research dreams. 2022 was no different, and saw many game-changing discoveries contribute to the

Does requiring students to publish a research paper before thesis submission lead to a high-quality PhD thesis, or does high-quality PhD work lead to publications in good journals? This question is unlike the chicken...

The White House painted an incomplete economic picture of its new policy for free, immediate access to research produced with federal grants. Will publishers adapt their business models to comply, or will scholars be on the hook?

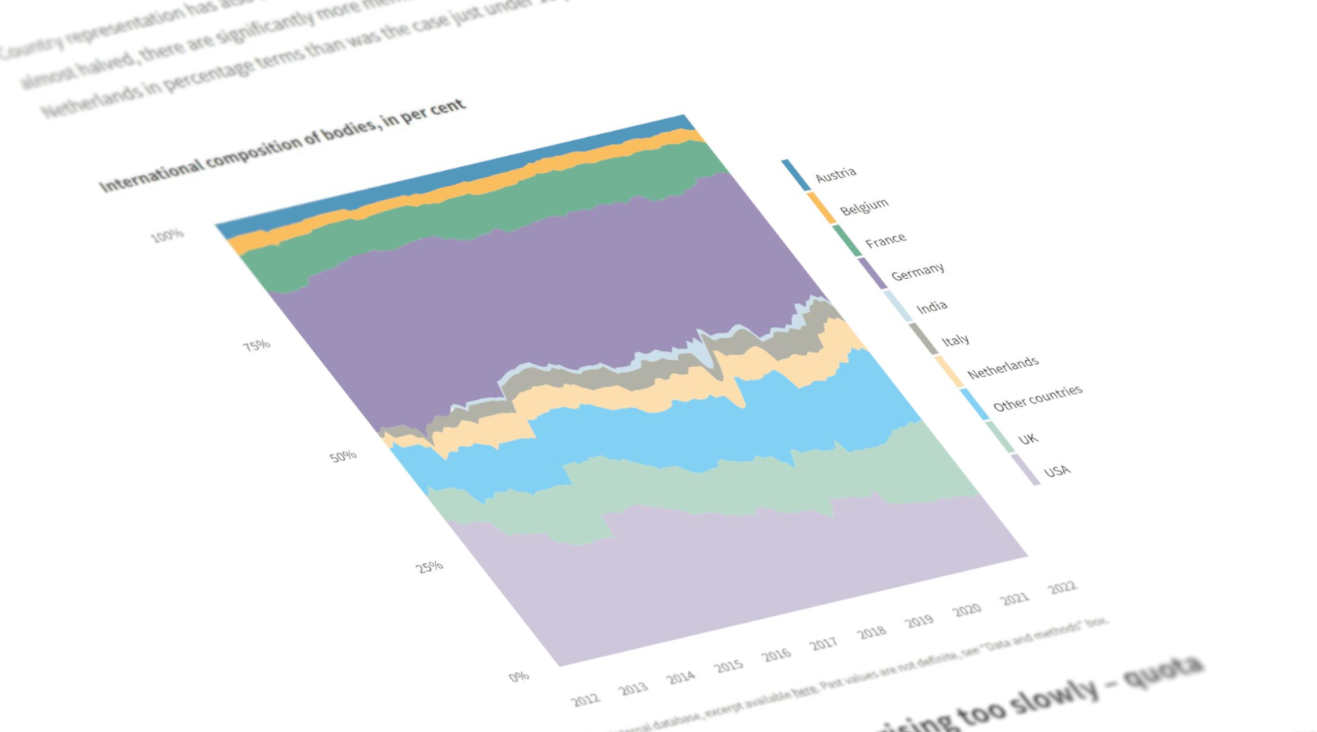

The SNSF's National Research Council decides whether or not to fund applications. The 89 evaluation panels handle the preparatory work on which it bases its decisions, assessing several thousand applications each year.

More than 80% of scientific papers stemming from Horizon 2020 funded projects were published in open access journals, according to the European Commission in a new report.

Effort aims to identify what's ethical and legal, and what's not.

Papers accepted by journals before results are known rate higher on rigor than standard studies.

There are good reasons why academicians should care about citations to scholarly articles. An important one is that members of the academy operate essentially as independent contractors.

Nations the world over are increasingly turning to quantitative performance-based metrics to evaluate the quality of research outputs, as these metrics are abundant and provide an easy measure of ranking research. In 2010, the Danish Ministry of Science and Higher Education followed this trend and began portioning out a percentage of the available research funding according to how many research outputs each Danish university produces. Not all research outputs are eligible: only those published in a curated list of academic journals and publishers, the so-called BFI list, are included. The BFI list is ranked, which may create incentives for academic authors to target certain publication outlets or publication types over others. In this study we examine the potential effect these relatively new research evaluation methods have had on the publication patterns of researchers in Denmark. The study finds that publication behaviors in the Natural Sciences & Technology, Social Sciences and Humanities (SSH) have changed, while the Health Sciences appear unaffected. Researchers in Natural Sciences & Technology appear to focus on high impact journals that reap more BFI points. While researchers in SSH have also increased their focus on the impact of the publication outlet, they also appear to have altered their preferred publication types, publishing more journal articles in the Social Sciences and more anthologies in the Humanities.

Indonesia has seen progress in open research ecosystem development. More needs to be done.

Policymakers are beginning to put monetary value on scientific publications. What does this mean for researchers?

This paper presents a state-of-the-art analysis of the presence of 12 kinds of altmetric events for nearly 12.3 million Web of Science publications published between 2012 and 2018.

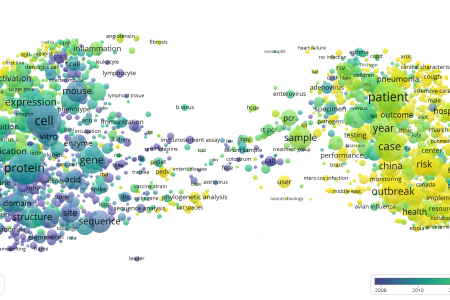

The current pandemic has exposed a host of issues with the current scholarly communication system, also with regard to the discoverability of scientific knowledge. Many research groups have pivoted to Covid-19 research without prior experience or adequate preparation. They were immediately confronted with two discovery challenges: (1) having to identify relevant knowledge from unfamiliar (sub-)disciplines with their own terminology and publication culture, and (2) having to keep up with the rapid growth of data and publications and being able to filter out the relevant findings.

The coronavirus pandemic has posed a special challenge for scientists: Figuring out how to make sense of a flood of scientific papers from labs and scientists unfamiliar to them.

In the current system of pre-publication peer review, which papers are scrutinized most thoroughly?

Many initiatives are keeping track of research on COVID-19 and coronaviruses. These initiatives, while valuable because they allow for fast access to relevant research, pose the question of subject delineation. We analyse here one such initiative, the COVID-19 Open Research Dataset (CORD-19).

Online sleuths have discovered what they suspect is a paper mill that has produced more than 400 scientific papers with potentially fabricated images. Some journals are now investigating the papers.