Data Visualization Tools Drive Interactivity and Reproducibility

New tools for building interactive figures and software make scientific data more accessible and reproducible.

Scientific research can be a cutthroat business, with undue pressure to publish quickly, first, and frequently. PLOS Biology is now formalizing a policy whereby manuscripts that confirm or extend a recently published study are eligible for consideration.

It’s not true that efforts to reform research may “end up destroying new ideas before they are fully explored.” In defense of the replication movement.

Replication is not enough. Marcus R. Munafò and George Davey Smith state the case for triangulation.

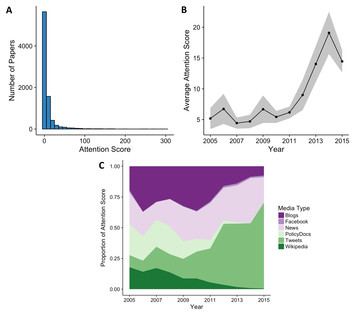

A new online tool measures the reproducibility of published scientific papers by analyzing data about articles that cite them.

Han Chunyu retracted disputed ‘breakthrough’ research but still enjoys support from his university and the local government.

Reproducible research includes sharing data and code. The reproducibility policy at the journal Biostatistics rewards articles with badges for data and code sharing. This study investigates whether the badges increased reproducible research, specifically data and code sharing, at Biostatistics.

A new paper recommends that the label “statistically significant” be dropped altogether; instead, researchers should describe and justify their decisions about study design and interpretation of the data, including the statistical threshold.

The systematic replication of other researchers’ work should be a normal part of science. That is the main message of an advisory report by the Dutch Academy of Sciences.

When rewards such as funding of grants or publication in prestigious journals emphasize novelty at the expense of testing previously published results, science risks developing cracks in its foundation.

The use of outdated computational tools is a major offender in science’s reproducibility crisis, and there’s growing momentum to avoid it.

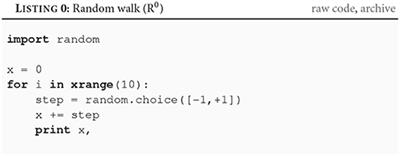

An article enumerating five characteristics that code written for computational science should possess.

Neuroskeptic comments on a new paper by Chris Drummond about the ‘reproducibility movement’. Assuming that what really matters is the testability of a given hypothesis, how fundamental is reproducibility to science?

ReScience resides on GitHub, where each new implementation of a computational study is made available together with comments, explanations, and software tests.

We wish to answer this question: If you observe a ‘significant’ p-value after doing a single unbiased experiment, what is the probability that your result is a false positive?
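
One common way to frame this question is a counting argument: among all experiments that reach p ≤ α, what fraction tested a null hypothesis that was actually true? The sketch below assumes a prior probability that a real effect exists (the `prior` parameter, which you have to choose), a statistical power, and a threshold α; the function name is hypothetical. It treats every result with p ≤ α alike, so a result sitting just below 0.05 carries a higher false-positive risk than this simple version reports.

```python
def false_positive_risk(prior: float, power: float, alpha: float = 0.05) -> float:
    """Fraction of 'significant' results (p <= alpha) that are false positives.

    prior: assumed probability that a real effect exists before the experiment.
    power: probability of reaching p <= alpha when the effect is real.
    """
    false_pos = alpha * (1 - prior)  # true nulls that cross the threshold anyway
    true_pos = power * prior         # real effects that are detected
    return false_pos / (false_pos + true_pos)


# With a 10% prior, lower power means a larger share of significant results are false.
for power in (0.8, 0.5, 0.2):
    print(f"power={power}: false positive risk={false_positive_risk(0.1, power):.2f}")
```

With a prior of 0.1 and power of 0.8, the risk is 0.045 / (0.045 + 0.08) = 0.36, far above the 5% many readers associate with α = 0.05.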

When a clinical trial falters, doctors find themselves sifting through the rubble.

According to its developers, Statcheck gets it right in more than 95% of cases. Some outsiders still aren’t convinced.

The Project Jupyter team shares its reboot of Binder, a tool allowing researchers to make their GitHub repositories executable by others.

"It is not statistics that is broken, but how it is applied to science." - S. Goodman

When Dutch researchers developed an open-source algorithm designed to flag statistical errors in psychology papers, it received a mixed reaction from the research community.
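
The core idea behind a checker like Statcheck is to recompute a p-value from the reported test statistic and compare it with the reported p-value, allowing for rounding. The toy sketch below handles only two-tailed z-tests written as "z = 2.10, p = .036"; the real tool covers more test types and reporting styles. The regex, the function names, and the rounding rule are assumptions made for illustration, not Statcheck's actual implementation.

```python
import re
from statistics import NormalDist

# Hypothetical pattern for APA-style z-test reports such as "z = 2.10, p = .036".
Z_REPORT = re.compile(r"z\s*=\s*(-?\d*\.?\d+),\s*p\s*=\s*(\d*\.\d+)")


def two_tailed_p(z: float) -> float:
    """Two-tailed p-value for a standard normal test statistic."""
    return 2 * (1 - NormalDist().cdf(abs(z)))


def rounding_tolerance(number: str) -> float:
    """Half a unit in the last reported decimal place, e.g. 0.005 for '2.10'."""
    decimals = len(number.split(".")[1]) if "." in number else 0
    return 0.5 * 10 ** -decimals


def check_z_reports(text: str, alpha: float = 0.05) -> list[dict]:
    """Flag z-test reports whose p-value does not match the test statistic."""
    results = []
    for z_text, p_text in Z_REPORT.findall(text):
        z, p_reported = abs(float(z_text)), float(p_text)
        dz, dp = rounding_tolerance(z_text), rounding_tolerance(p_text)
        # Any true statistic that rounds to the reported z is allowed, so compute
        # the range of p-values it could produce (a larger |z| gives a smaller p).
        p_low = two_tailed_p(z + dz)
        p_high = two_tailed_p(max(z - dz, 0.0))
        consistent = p_low - dp <= p_reported <= p_high + dp
        p_recomputed = two_tailed_p(z)
        # A "gross" inconsistency is one that flips the significance decision.
        gross = (not consistent) and ((p_reported <= alpha) != (p_recomputed <= alpha))
        results.append({"z": z_text, "p_reported": p_reported,
                        "p_recomputed": p_recomputed,
                        "consistent": consistent, "gross": gross})
    return results


for r in check_z_reports("... (z = 2.10, p = .036) ... (z = 1.50, p = .01)"):
    print(r["z"], r["consistent"], r["gross"])
```

The first report is consistent (z = 2.10 gives p ≈ 0.036); the second is flagged as a gross inconsistency, because z = 1.50 gives p ≈ 0.13, which is not significant at α = 0.05.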

Adequate sample size is key to reproducible research findings: low statistical power can increase the probability that a statistically significant result is a false positive.
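
A quick way to see what "adequate" means is a standard power calculation. The sketch below uses the normal approximation for a two-sided, two-sample comparison of means, n = 2((z₁₋α/₂ + z_power) / d)² per group, where d is the standardized effect size (Cohen's d). The function names and defaults are illustrative choices; an exact t-test calculation gives slightly larger numbers (64 per group rather than 63 for d = 0.5).

```python
import math
from statistics import NormalDist


def n_per_group(d: float, alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate per-group sample size for a two-sided, two-sample comparison."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for the two-sided test
    z_power = z.inv_cdf(power)          # quantile matching the desired power
    return math.ceil(2 * ((z_alpha + z_power) / d) ** 2)


def approx_power(d: float, n: int, alpha: float = 0.05) -> float:
    """Approximate power with n subjects per group (ignores the opposite tail)."""
    z = NormalDist()
    return z.cdf(d * math.sqrt(n / 2) - z.inv_cdf(1 - alpha / 2))


print(n_per_group(0.5))                 # 63 under this approximation
print(round(approx_power(0.5, 20), 2))  # about 0.35
```

With 20 subjects per group and a medium effect (d = 0.5), power is only about 35%, so most real effects of that size would be missed, and a larger share of the significant results that do appear would be false positives.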

Here's a list of more cultural changes we can make to reduce the stress and pressure surrounding the reproducibility crisis.

Linking associated research outputs.

A review of published papers that deny climate change found them all flawed. Scientists who deny climate change are not modern-day Galileos.

Imagine succinct, up-to-date information, not for software projects but for modern research publications.

A psychology initiative aims to engage dozens of laboratories around the world in large-scale studies, because the “tentative, preliminary results” produced by small studies conducted in relatively isolated laboratories “just aren’t getting the job done.”

Perverse incentives and the misuse of quantitative metrics have undermined the integrity of scientific research.

A challenge investigating the reproducibility of empirical results submitted to the 2018 International Conference on Learning Representations.

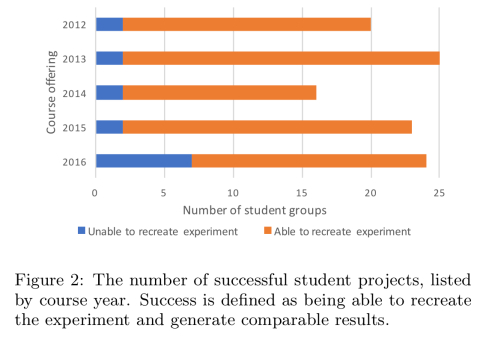

Students taking Stanford’s Advanced Topics in Networking class have to select a networking research paper and reproduce a result from it as part of a three-week pair project.